We run our Marmotta and PostgreSQL servers on Amazon EC2, on instances that are EBS-optimized. EBS optimization gives an instance better throughput to its EBS volumes. In the course of addressing performance issues, we've had to attach faster EBS volumes, upgrade our EC2 instance type, and enable Enhanced Networking to deal with I/O and networking bottlenecks. Enhanced Networking gives you theoretically faster network connectivity between servers, and it also gives you an Ethernet driver in the Linux kernel that supports irqbalance. That matters for our discussion of balancing interrupt requests, which has its own dedicated page.

Amazon was throttling our storage I/O until we upgraded to EBS volumes (in effect, SAN storage volumes) rated for higher block interface request rates.

Ongoing slow write performance questions

This section is relevant to our page about addressing slow updates and inserts.

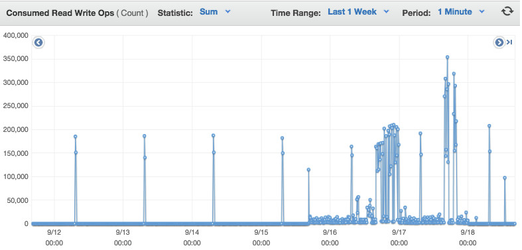

The EBS volume that houses Marmotta's triples table is currently our greatest concern, because it is the one that gets hit hardest during periods of bad write performance. It's rated at 12,000 IOPS. Our CloudWatch chart for this volume shows it peaking at about 350K read/write operations per minute, which is roughly 5,800 operations per second, or about half of the rated figure.

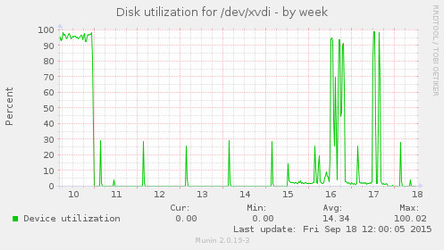
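
The rated figure is per second while the CloudWatch chart is per minute, so the two need to be put on the same scale before comparing them. A minimal sketch of that arithmetic, using only the two numbers quoted above (the function names are ours, for illustration):

```python
def per_second(ops_per_minute: float) -> float:
    """Convert a per-minute operation count to operations per second."""
    return ops_per_minute / 60.0


def fraction_of_rating(ops_per_minute: float, rated_iops: float) -> float:
    """Share of the volume's rated IOPS that a per-minute count represents."""
    return per_second(ops_per_minute) / rated_iops


peak_ops_per_minute = 350_000  # approximate maximum read off the CloudWatch chart
rated_iops = 12_000            # the volume's rating

print(f"{per_second(peak_ops_per_minute):.0f} ops/s")                        # 5833 ops/s
print(f"{fraction_of_rating(peak_ops_per_minute, rated_iops):.0%} of rated")  # 49% of rated
```

On raw operation counts alone, then, the volume appears to have headroom. This ignores operation size.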

Meanwhile, our kernel statistics indicate high utilization for this device:

There's some possibility that we're running up against a storage volume speed limitation again, because AWS calculates IOPS from not just the number of operations but also the size of each operation, which can vary. Throwing faster hardware at this problem might make it go away for a while, but the patterns documented on the bad insert / update performance page still seem more fundamental to us.
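
To illustrate how operation size could close the gap between observed operations and the rating, here is a rough sketch of size-weighted accounting. It assumes that a request larger than some accounting unit is counted as several operations. The unit size and the request sizes below are hypothetical placeholders, not values we measured or took from AWS documentation; the real unit depends on the volume type and should be checked there.

```python
def counted_ops(request_bytes: int, unit_bytes: int) -> int:
    """Accounting units consumed by one request (assumed ceiling division, minimum 1)."""
    return max(1, -(-request_bytes // unit_bytes))


def effective_iops(requests_per_second: float, request_bytes: int, unit_bytes: int) -> float:
    """Size-weighted operations per second under the assumed accounting rule."""
    return requests_per_second * counted_ops(request_bytes, unit_bytes)


KIB = 1024
observed = 5833        # ops/s, from about 350K operations per minute
unit = 16 * KIB        # hypothetical accounting unit

# Hypothetical average request sizes, for illustration only.
for size in (8 * KIB, 32 * KIB):
    print(f"{size // KIB} KiB requests: {effective_iops(observed, size, unit):.0f} effective IOPS")
# 8 KiB requests: 5833 effective IOPS
# 32 KiB requests: 11666 effective IOPS
```

Under these assumed numbers, an average request of twice the accounting unit would put the volume close to its 12,000 IOPS rating even though the raw operation count is only about half of it. Whether that is what is happening depends on the actual request sizes, which this sketch does not establish.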